Here is an overview of the projects presented by the LPVS at the prestigious Vision Sciences Society conference, which brings together researchers from a broad range of disciplines contributing to scientific progress in vision, including visual and perceptual psychology, neuroscience, computational vision, and cognitive psychology. The scientific content of the presentations reflects the diversity of topics in vision science, from visual coding to perception, by way of the visual control of action and the development of new methodologies in cognitive psychology, computer vision, and neuroimaging.

Poster session – Faces and neural mechanisms

N170 sensitivity to the horizontal information of facial expressions

Justin Duncan1,2, Frédéric Gosselin3, Caroline Blais1, Daniel Fiset1

1 Université du Québec en Outaouais, 2 Université du Québec à Montréal, 3 Université de Montréal

VIEW POSTER

The N170 event-related potential, which is preferentially tuned to faces (for a review, see Rossion, 2014), has been linked with processing of the eyes (Rousselet, Ince, van Rijsbergen & Schyns, 2014), of diagnostic facial features of emotions (Schyns, Petro & Smith, 2007), and of horizontal facial information (Jacques, Schiltz & Goffaux, 2014). Recent findings have shown that horizontal information is highly diagnostic of the basic facial expressions, and that this link is best predicted by utilization of the eyes (Duncan et al., 2017). Given these findings, we were interested in how N170 amplitude relates to spatial orientations in a facial expression categorization task. Five subjects each completed 7,000 trials (1,000 per expression) while EEG activity was measured at a 256 Hz sampling rate. Faces were randomly filtered with orientation bubbles (Duncan et al., 2017) and presented on screen for 150 ms. Performance was maintained at 57.14% correct, using QUEST (Watson & Pelli, 1983) to modulate stimulus contrast. The signal was referenced to the mastoid electrodes and bandpass filtered (1-30 Hz). It was epoched between -300 and 700 ms relative to stimulus onset, and eye movements were removed using ICA. Single-trial spherical spline current source density (CSD) was computed using the CSD toolbox (Kayser & Tenke, 2006; Tenke & Kayser, 2012). Our main analysis consisted of a multiple linear regression of single-trial orientation filters on PO8 voltages at each time point. The statistical threshold (Zcrit = 3.6, p < .05, two-tailed) was established with the Stat4CI toolbox (Chauvin et al., 2005). We found a negative correlation between horizontal information availability and voltage (Zmin = -5.43, p < .05) in the 50 ms leading up to the N170’s peak. Consistent with the proposition that the N170 component reflects the integration of diagnostic information (Schyns, Petro & Smith, 2007), the association between horizontal information and amplitude was strongest 25 ms before the peak and disappeared completely at the peak.
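The per-time-point regression described in this abstract can be sketched roughly as follows. This is only an illustration on synthetic data, not the authors' pipeline: the function name `regress_filters_on_voltage`, the array shapes, and the simulated effect are all assumptions, and the Stat4CI thresholding step is not reproduced.

```python
import numpy as np

def regress_filters_on_voltage(filters, voltages):
    """Least-squares fit of voltage on orientation filters at every time point.

    filters:  (n_trials, n_orientations) sampling weights per trial (predictors)
    voltages: (n_trials, n_timepoints) single-trial voltages (dependent variable)
    returns:  (n_orientations, n_timepoints) regression coefficients
    """
    # Centre both sides so that no explicit intercept column is needed
    X = filters - filters.mean(axis=0)
    Y = voltages - voltages.mean(axis=0)
    # lstsq solves all time points at once when Y has several columns
    coefs, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return coefs

# Synthetic data (hypothetical sizes, chosen only for the demonstration)
rng = np.random.default_rng(0)
n_trials, n_orient, n_time = 2000, 12, 50
filters = rng.random((n_trials, n_orient))
voltages = rng.normal(size=(n_trials, n_time))
# Simulated effect: more information at orientation index 6
# lowers the voltage at time index 30
voltages[:, 30] -= 2.0 * filters[:, 6]

coefs = regress_filters_on_voltage(filters, voltages)
print(coefs.shape, int(np.argmin(coefs[:, 30])))
```

In a real analysis the coefficients would then be converted to z-scores and compared against a corrected threshold such as the one reported above.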

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

Cortical activation of fearful faces requires central resources: multitasking processing deficits revealed by event-related potentials

Amélie Roberge1, Justin Duncan2,3, Ulysse Fortier-Gauthier1, Daniel Fiset2, Benoit Brisson1

1 Département de psychologie, Université du Québec à Trois-Rivières, 2 Département de Psychoéducation et de Psychologie, Université du Québec en Outaouais, 3 Département de Psychologie, Université du Québec à Montréal

To investigate whether emotional face processing requires central attention, a psychological refractory period paradigm was combined with the event-related potential (ERP) technique. Participants were asked to categorize tones as high (900 Hz or 2000 Hz) or low (200 Hz or 426 Hz) as quickly and accurately as possible, and then to indicate whether a face showed a fearful or a neutral expression. Stimulus onset asynchrony (SOA) between the presentation of the tone and the face was manipulated (SOA: 300, 650 or 1000 ms) to vary the amount of central attention available to perform the face expression task (less central attention is available at short than at long SOAs). The amplitudes of frontally distributed ERP components associated with emotional face processing (computed as the difference between fear and neutral conditions: Eimer & Holmes, 2007) were measured at all SOAs. The first component (175-225 ms post-visual stimulus onset), which is thought to reflect rapid initial detection of the emotion, was not affected by SOA, F(2,50) = 2.24, p = .12. However, a significant effect of SOA was observed on a later sustained frontal positivity (300-400 ms post-visual stimulus onset), which is thought to reflect the conscious evaluation of emotional content, F(2,50) = 5.33, p = .01. For both components, no effect of SOA was observed in a subsequent control experiment in which both stimuli were presented but only a response to the expression of the face was required, F(2,32) = 2.80, p = .10 and F(2,32) = 1.26, p = .30. These results suggest that the rapid perceptual detection of the facial expression is independent of central attention. In contrast, the subsequent cognitive stage of conscious evaluation of emotional content does require central attention to proceed.

Acknowledgements: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG), Fonds québécois de la recherche sur la nature et les technologies (FRQNT)

Poster session – Faces and emotions

Spatial frequencies for accurate categorization and discrimination of facial expressions

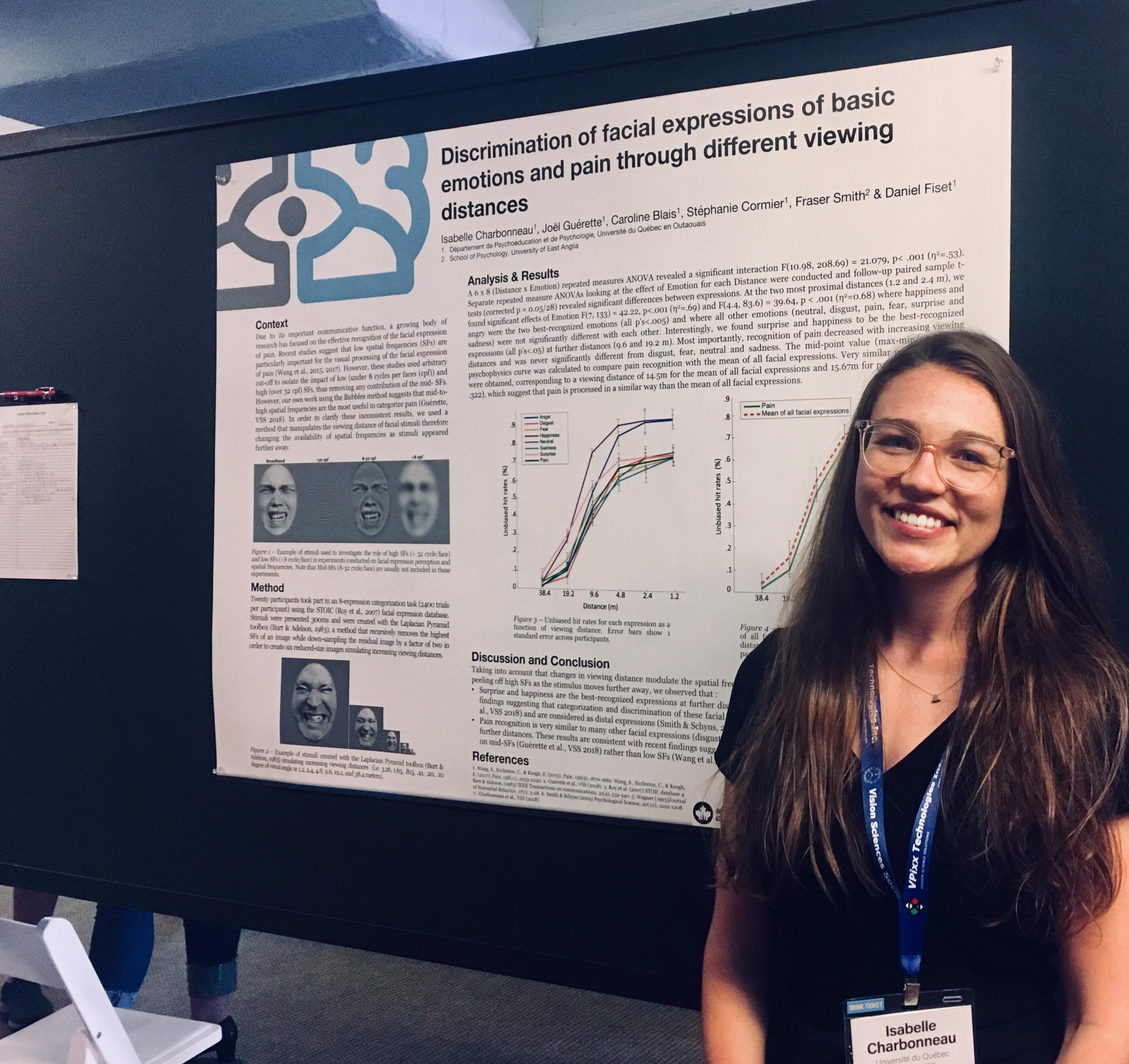

Isabelle Charbonneau1, Stéphanie Cormier1, Joël Guérette1, Marie-Pier Plouffe-Demers1, Caroline Blais1, Daniel Fiset1

1 Université du Québec en Outaouais

VIEW POSTER

Studies have examined the role of spatial frequencies (SFs) in facial expression perception. However, most of these studies used arbitrary cut-offs to isolate the impact of low and high SFs (De Cesarei & Codispoti, 2012), thus removing any possible contribution of mid-SFs. The present study aims to reveal the diagnostic SFs for each basic emotion, as well as for neutral faces, using SF Bubbles (Willenbockel et al., 2010). Forty participants were tested (20 in a categorization task, 20 in a discrimination task; 4200 trials per participant). In the categorization task, subjects were asked to identify the perceived emotion among all the alternatives. In the discrimination task, subjects were asked, in a block-design setting (block order was counterbalanced across participants), to discriminate between a target emotion (e.g., fear) and all other emotions. Mean accuracy was maintained halfway between chance (i.e. 12.5% and 50% correct for each task, respectively) and perfect accuracy. In both tasks, accuracy for happiness and surprise is associated with low SFs (peaking at around 5 cycles per face (cpf); Zcrit = 3.45, p < 0.05 for all analyses), whereas accuracy for sadness and neutrality is associated with mid-SFs (peaking between 11.5 and 15 cpf for both tasks). Interestingly, the facial expressions of fear and anger reveal significantly different patterns of use across tasks. Whereas their correct categorization is correlated with the presence of mid-to-high SFs (peaking at 14 and 20 cpf for anger and fear, respectively), their accurate discrimination is correlated with the utilization of lower SFs (peaking at 4 and 3.7 cpf). These results suggest that the visual system is able to use low-SF information to detect and discriminate socially threatening cues. However, higher SFs are probably necessary in a multiple-choice categorization task to allow fine-grained discrimination.
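As a small worked example of the accuracy targets mentioned in this abstract, the midpoint between chance and perfect accuracy can be computed as below. The helper name `halfway_accuracy` is hypothetical, and the numbers of alternatives (8 and 2) are inferred from the stated chance levels of 12.5% and 50%; the resulting targets are derived here and are not reported in the abstract itself.

```python
def halfway_accuracy(n_alternatives):
    """Accuracy halfway between chance (1 / n_alternatives) and 100% correct."""
    chance = 1.0 / n_alternatives
    return (chance + 1.0) / 2.0

# Categorization task: chance of 12.5% implies 8 response alternatives
print(round(100 * halfway_accuracy(8), 2))  # 56.25
# Discrimination task: chance of 50% implies 2 response alternatives
print(round(100 * halfway_accuracy(2), 2))  # 75.0
```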

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

Spatial frequencies for the visual processing of the facial expression of pain

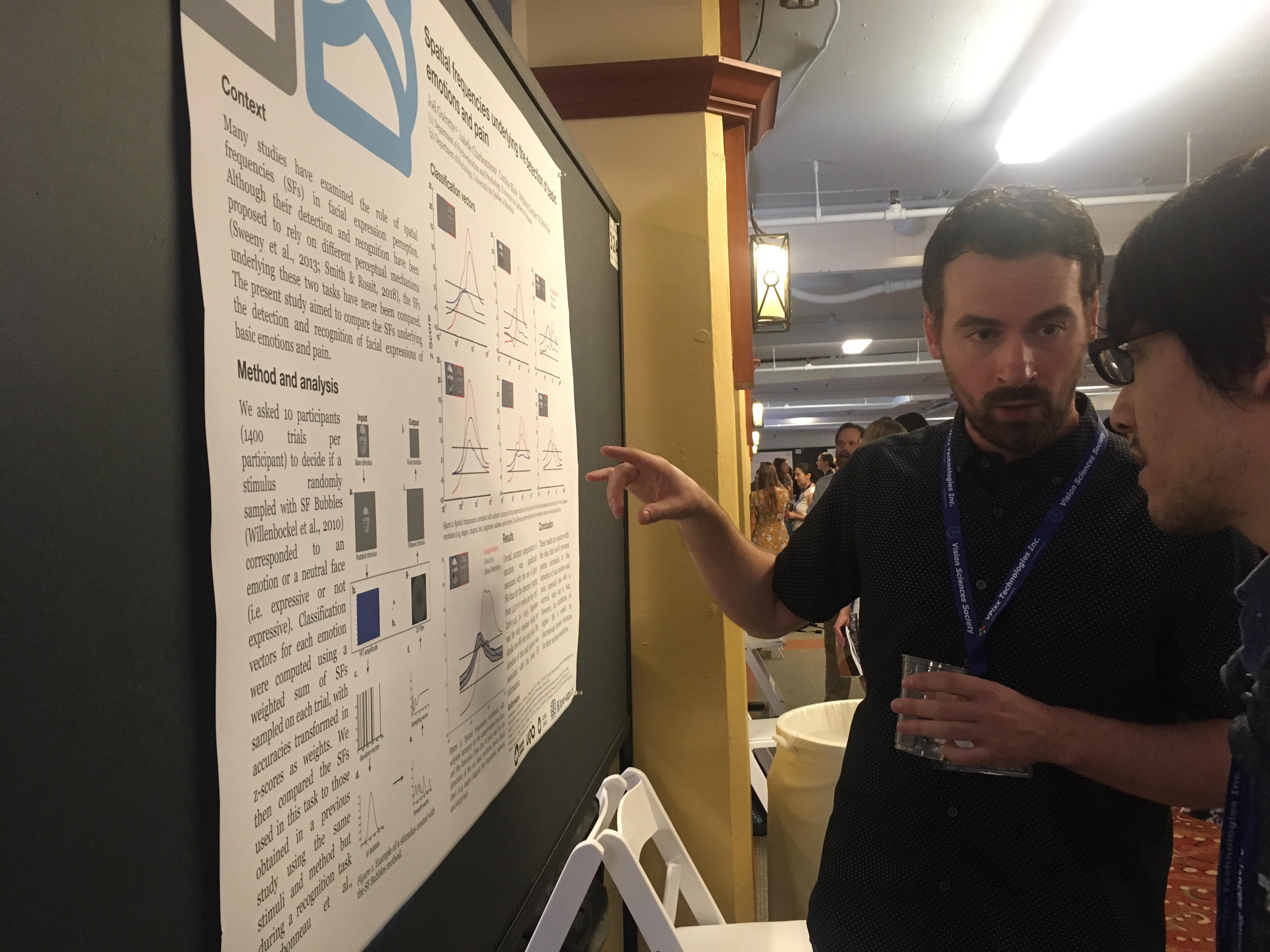

Joël Guérette1, Stéphanie Cormier1, Isabelle Charbonneau1, Caroline Blais1, Daniel Fiset1

1 Département de psychoéducation et de psychologie, Université du Québec en Outaouais

VIEW POSTER

Recent studies suggest that low spatial frequencies (SFs) are particularly important for the visual processing of the facial expression of pain (Wang et al., 2015; 2017). However, these studies used arbitrary cut-offs to isolate the impact of low (under 8 cycles per face (cpf)) and high (over 32 cpf) SFs, thus removing any contribution of the mid-SFs. Here we compared the utilization of SFs for pain and other basic emotions in three tasks (20 participants per task): 1) a facial expression recognition task with all basic emotions and pain, 2) a facial expression discrimination task in which one target expression had to be discriminated from the others, and 3) a facial expression discrimination task with only two choices (i.e. fear vs. pain, pain vs. happy). We used SF Bubbles (Willenbockel et al., 2010), a method that randomly samples SFs on a trial-by-trial basis, enabling us to pinpoint the SFs that are correlated with accuracy. In the first task, accurate categorization of pain was correlated with the presence of a large band of SFs ranging from 4.3 to 52 cpf and peaking at 14 cpf (Zcrit = 3.45, p < 0.05 for all analyses). In the second task, the correct discrimination of pain was correlated with the presence of a band of SFs ranging from 5 to 20 cpf and peaking at 11 cpf. In the third task, we computed the classification vectors for the pain-happiness and pain-fear conditions and identified the overlapping SFs. In this task, SFs ranging from 2.7 to 13 cpf and peaking at 7.3 cpf were significantly correlated with pain discrimination. Our results highlight the importance of the mid-SFs in the visual processing of the facial expression of pain and suggest that any method removing these SFs offers an incomplete account of SF diagnosticity.

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

Poster session – Faces and individual differences

Task-specific extraction of horizontal information in faces

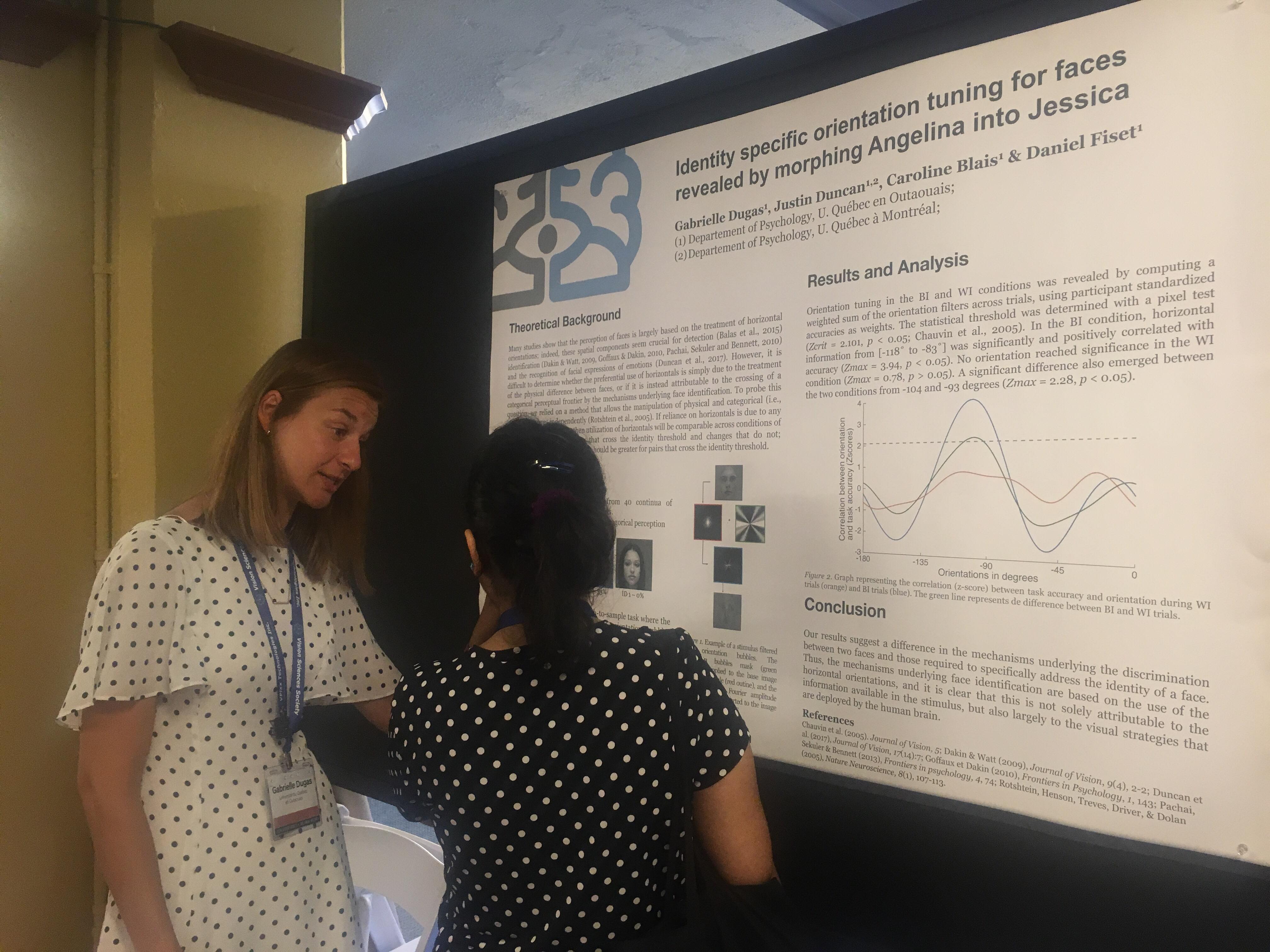

Gabrielle Dugas1, Jessica Royer1, Justin Duncan1,2, Caroline Blais1, Daniel Fiset1

1 Université du Québec en Outaouais, 2 Université du Québec à Montréal

VIEW POSTER

Horizontal information is crucial for accurate face processing (Goffaux & Dakin, 2010). Individual differences in horizontal tuning were shown to correlate with aptitude levels in both face identification (Pachai, Sekuler & Bennett, 2013) and facial expression categorization (Duncan et al., 2017). These results thus indicate that the same visual information correlates with abilities in two different face processing tasks. Here, we intended to verify whether the ability to extract horizontal information generalizes from one task to the other at the individual level. To do this, we asked 28 participants to complete both a 10-AFC face identification task and a race categorization (Caucasian vs. African-American) task (600 trials per task). To find out which parts of the orientation spectrum were associated with accuracy, images were randomly filtered with orientation bubbles (Duncan et al., 2017). We then performed, for each subject, what amounts to a multiple linear regression of orientation sampling vectors (independent variable) on response accuracy scores (dependent variable). A group classification vector (CV) was created by first summing individually z-scored CVs across subjects, and then dividing the outcome by √n, where n is the sample size. These analyses, performed separately for each task, show that horizontal information is highly diagnostic for both face identification (Zmax = 24.8) and race categorization (Zmax = 22.9), all ps < .05. The group CVs of the two tasks were highly correlated, r = .96, p < .001, showing high similarity in visual strategies at the group level. At the individual level, however, horizontal tuning measures (as per Duncan et al., 2017) in the identification and race categorization tasks did not correlate, r = -0.02, ns. Our results thus show that, although horizontal information is diagnostic for both tasks, individual differences in the extraction of this information appear to be task dependent.
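The group classification vector described in this abstract (sum of individually z-scored CVs divided by √n) can be sketched as follows. The function name `group_classification_vector` is hypothetical, and z-scoring each subject's CV across its own orientation bins is an assumption made for illustration; the abstract does not specify the reference distribution used for the z-scores.

```python
import numpy as np

def group_classification_vector(individual_cvs):
    """Combine per-subject CVs into one group CV.

    individual_cvs: (n_subjects, n_orientations) raw classification vectors
    returns:        (n_orientations,) sum of z-scored CVs divided by sqrt(n)
    """
    cvs = np.asarray(individual_cvs, dtype=float)
    # Assumption: each CV is z-scored across its own orientation bins
    mean = cvs.mean(axis=1, keepdims=True)
    std = cvs.std(axis=1, ddof=1, keepdims=True)
    z = (cvs - mean) / std
    # Dividing the sum of n z-scores by sqrt(n) keeps unit variance under the null
    return z.sum(axis=0) / np.sqrt(cvs.shape[0])

# Synthetic example: 28 subjects, 180 orientation bins (hypothetical sizes)
rng = np.random.default_rng(0)
demo_cvs = rng.normal(size=(28, 180))
group_cv = group_classification_vector(demo_cvs)
print(group_cv.shape)
```

Dividing by √n rather than n is what allows the group vector to be read on the same z scale as the individual vectors.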

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

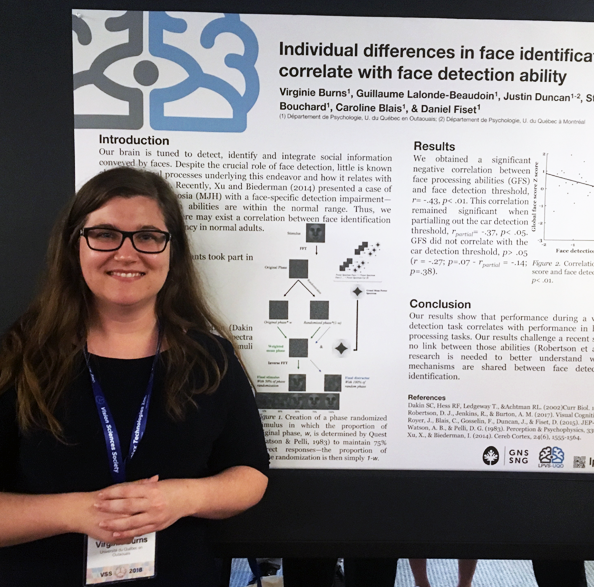

Individual differences in face identification correlate with face detection ability

Virginie Burns1, Guillaume Lalonde-Beaudoin1, Justin Duncan1,2, Stéphanie Bouchard1, Caroline Blais1, Daniel Fiset1

1 Département de Psychoéducation et de Psychologie, Université du Québec en Outaouais, 2 Département de Psychologie, Université du Québec à Montréal

VIEW POSTER

Our brain is tuned to detect, identify and integrate social information conveyed by faces. Despite the crucial role of face detection, little is known about the visual processes underlying this ability and how it relates to face identification. Recently, Xu and Biederman (2014) presented a case of acquired prosopagnosia with a face-specific detection impairment. Compared with controls, MJH needs significantly more visual signal for face detection, but not for car detection. We therefore hypothesized that face identification and detection proficiency may be correlated in normal adults. Forty-five participants (24 women) performed the Cambridge Face Memory Test (CFMT; Duchaine & Nakayama, 2006), the Cambridge Face Perception Test (CFPT; Duchaine, Germine, & Nakayama, 2007), and the Glasgow Face Matching Test (GFMT; Burton, White, & McNeil, 2010). They also completed two detection tasks: a face detection task and a car detection task. The power spectra were equalized across face and car stimuli. Individual face identification abilities were calculated by computing a weighted average of CFMT, GFMT, and CFPT scores (the latter of which was negatively scored). Face and car detection abilities were reflected by their respective detection thresholds, defined as phase spectrum coherence (as per Xu and Biederman, 2014). We observed a negative correlation between face identification ability scores and face detection thresholds (r = -.47, p < .01), which remained significant when computing the Spearman correlation (rs = -.42, p < .01). The correlation also remained significant when controlling for car detection ability (r = -.371, p < .05). Our results suggest that face detection and face identification share some perceptual or cognitive resources. More research will be needed to better understand what exactly is shared between these two tasks.
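Controlling a correlation for a third variable, as done here for car detection ability, is commonly implemented as a partial correlation. The sketch below shows one standard way to compute it (correlating the residuals after regressing out the covariate). The function name `partial_corr` and the synthetic data are assumptions; the abstract does not state which procedure the authors used.

```python
import numpy as np

def partial_corr(x, y, covariate):
    """Pearson correlation between x and y after regressing out a covariate."""
    x, y, c = (np.asarray(v, dtype=float) for v in (x, y, covariate))
    # Design matrix with an intercept and the covariate
    design = np.column_stack([np.ones_like(c), c])
    # Residuals of x and y once the covariate's linear contribution is removed
    res_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    res_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return np.corrcoef(res_x, res_y)[0, 1]

# Synthetic check: x and y share the covariate but have opposite unique parts
rng = np.random.default_rng(0)
covariate = rng.normal(size=200)
unique = rng.normal(size=200)
x = covariate + unique
y = covariate - unique
print(round(partial_corr(x, y, covariate), 3))
```

In this toy case the covariate masks a perfectly negative relationship between the unique parts of `x` and `y`, which the partial correlation recovers.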

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

Poster session – Attention and individual differences

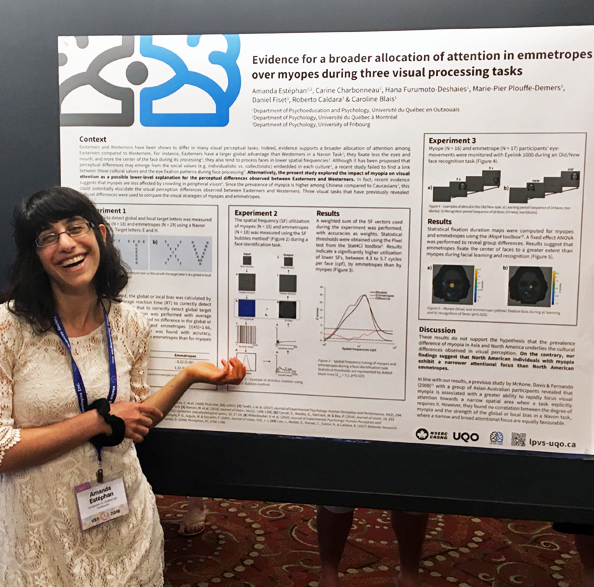

Evidence for a broader allocation of attention in emmetropes over myopes during three visual processing tasks

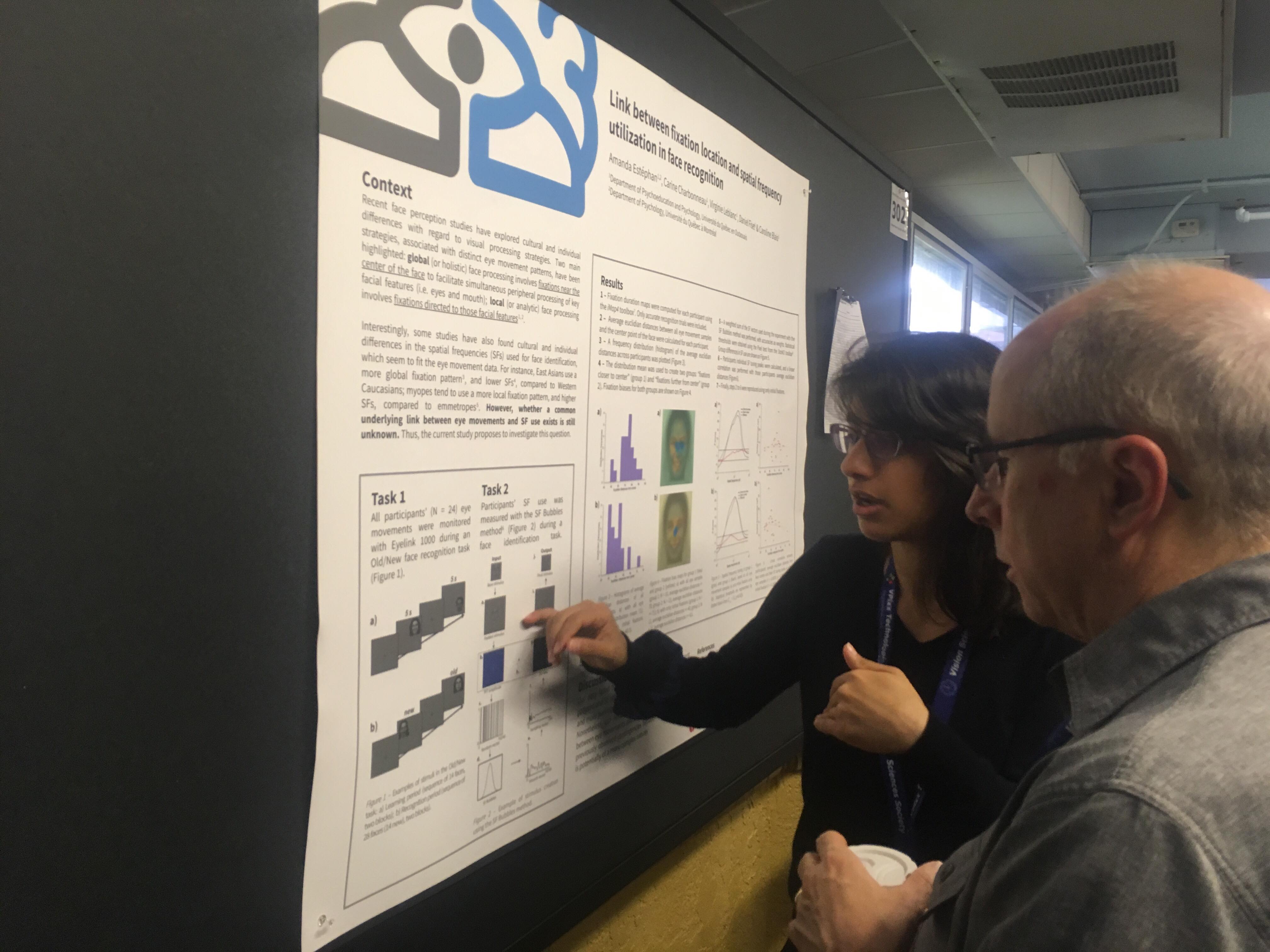

Amanda Estéphan1,2, Carine Charbonneau1, Hana Furumoto-Deshaies1, Marie-Pier Plouffe-Demers1, Daniel Fiset1, Roberto Caldara3, Caroline Blais1

1 Département de Psychoéducation et Psychologie, Université du Québec en Outaouais, 2 Département de Psychologie, Université du Québec à Montréal, 3 Department of Psychology, University of Fribourg

VIEW POSTER

Last year (VSS, 2017), we explored the impact of myopia on visual attention as a possible explanation for the perceptual differences observed between Easterners and Westerners: namely, that Easterners have a larger global advantage than Westerners in a Navon task (McKone et al., 2010); fixate the eyes and mouth less, and the centre of the face more, during face processing (Blais et al., 2008); and tend to process faces in lower spatial frequencies (Tardif et al., 2017). Myopes and emmetropes were tested using Navon’s paradigm to measure their ability to detect global versus local target letters, and the Spatial Frequency (SF) Bubbles method (Willenbockel et al., 2010a) to measure their use of SFs during a face identification task. We initially found unexpected results suggesting that emmetropes were better than myopes at detecting global letters and that they used lower SFs than myopes to correctly identify faces. Here, we delved deeper into this inquiry: a greater number of participants were tested with Navon’s paradigm (myopes = 18; emmetropes = 29) and with SF Bubbles (myopes = 15; emmetropes = 18). In addition, we measured participants’ eye movements during another face recognition task (myopes = 11; emmetropes = 9). In support of our previous findings, our new results indicate that emmetropes have a higher global processing bias than myopes [t(45) = -3.269; p = 0.002], and make greater use of lower SFs, between 4.3 and 5.7 cycles per face [Stat4CI (Chauvin et al., 2005): Zcrit = -3.196, p < 0.025]. Finally, our eye-movement results suggest that emmetropes fixate the centre of the face to a greater extent than myopes [analysis with iMap4 (Lao et al., in press)]. These findings offer a new avenue to explore how myopes and emmetropes process information contained in visual stimuli.

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

Poster session – Faces: Culture, familiarity and the other-race effect

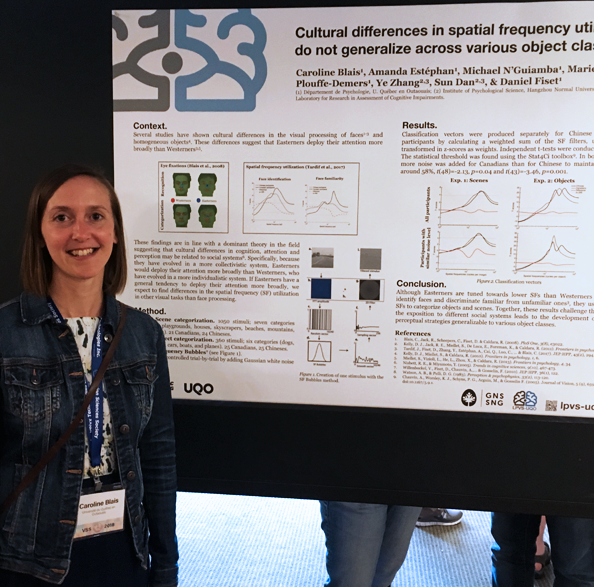

Cultural differences in spatial frequency utilisation do not generalize across various object classes

Caroline Blais1, Amanda Estéphan1,2, Michael N’Guiamba N’Zie1, Marie-Pier Plouffe-Demers1, Ye Zhang3,4, Dan Sun3,4, Daniel Fiset1

1 Psychology department, University of Quebec in Outaouais, 2 Psychology department, University of Quebec in Montreal, 3 Institute of Psychological Sciences, Hangzhou Normal University, 4 Zhejiang Key Laboratory for Research in Assessment of Cognitive Impairments

VIEW POSTER

Several studies have shown cultural differences in the fixation patterns observed during tasks of different nature, e.g. face identification (Blais et al., 2008; Kelly et al., 2011), race categorization (Blais et al., 2008), and recognition of visually homogeneous objects (Kelly et al., 2010). These differences suggest that Easterners deploy their attention more broadly and rely more on extrafoveal processing than Westerners (Miellet et al., 2013). This finding is in line with a dominant theory in the field suggesting that cultural differences in cognition, attention and perception may be related to social systems (Nisbett & Miyamoto, 2005). Specifically, Easterners, because they have evolved in a more collectivistic system, would deploy their attention more broadly than Westerners, who have evolved in a more individualistic system. However, studies revealing cultural differences in fixation patterns during face processing have been challenged by the findings that two fixations suffice for face recognition (Hsiao & Cottrell, 2008), and that early fixations are not modulated by culture (Or, Peterson & Eckstein, 2015). Since deploying attention over a broader area has been shown to modulate spatial resolution, directly assessing the spatial frequency (SF) utilisation underlying stimulus recognition would help clarify the impact of culture on perceptual processing. Here, we present a set of four experiments in which the SFs used by Easterners and Westerners were measured while they identified faces, discriminated familiar from unfamiliar faces, and categorized objects and scenes. The results reveal that Easterners are tuned towards lower SFs than Westerners when they identify faces and discriminate familiar from unfamiliar ones (Tardif et al., 2017), but use the same SFs to categorize objects and scenes. Together, these results challenge the view that exposure to different social systems leads to the development of different perceptual strategies that generalize to various object classes.

Acknowledgement: Conseil de recherches en sciences naturelles et en génie du Canada (CRSNG)

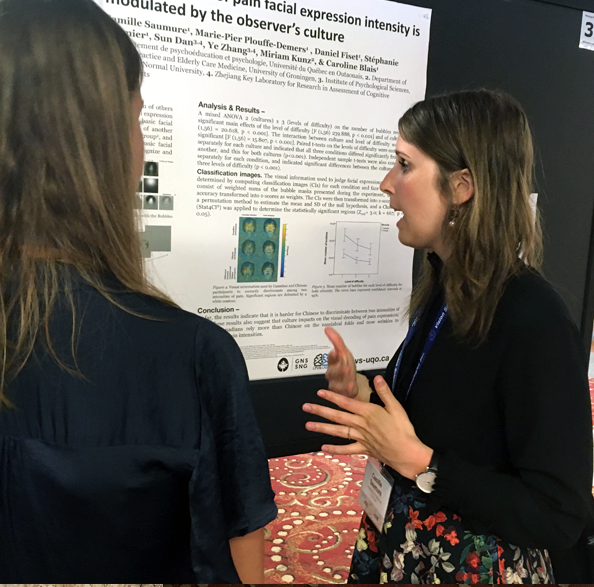

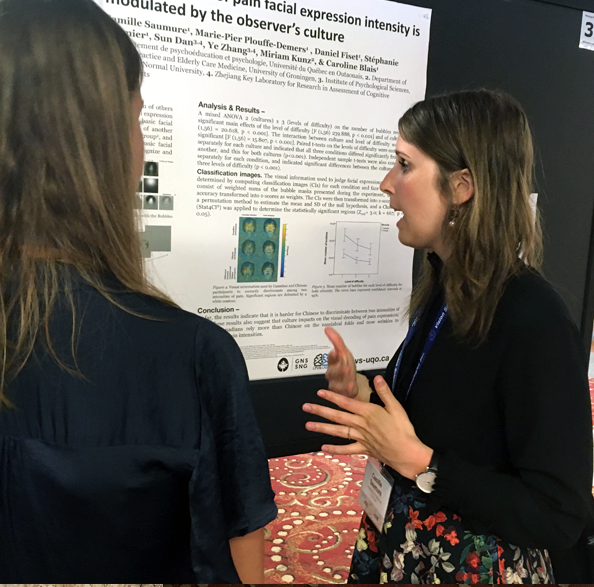

The impact of culture on visual strategies underlying the judgment of facial expressions of pain.

Camille Saumure1, Marie-Pier Plouffe-Demers1, Daniel Fiset1, Stéphanie Cormier1, Dan Sun3,4, Zhang Ye3,4, Miriam Kunz2, Caroline Blais1

1 Département de psychoéducation et de psychologie, Université du Québec en Outaouais, 2 Department of General Practice and Elderly Care Medicine, University of Groningen, 3 Institute of Psychological Sciences, Hangzhou Normal University, 4 Zhejiang Key Laboratory for Research in Assessment of Cognitive Impairments

VIEW POSTER

Research has revealed that observers’ ability to recognize basic facial emotions expressed by individuals of another ethnic group is poor (Elfenbein & Ambady, 2002), and that culture modulates the visual strategies underlying the recognition of basic facial expressions (Jack et al., 2009; Jack, Caldara, Schyns, 2012; Jack et al., 2012). Although it has been suggested that pain expression has evolved in order to be easily detected (Williams, 2002), the impact of culture on the visual strategies underlying the recognition of pain facial expressions remains underexplored. In this experiment, Canadian (N=28) and Chinese (N=30) participants were tested with the Bubbles method (Gosselin & Schyns, 2001) to compare the facial features used to discriminate between two pain intensities. Stimuli consisted of 16 face avatars (2 identities x 2 ethnicities x 4 levels of intensity difference) created with FACEGen and FACSGen. The amount of facial information needed to reach an accuracy rate of 75% was higher for Chinese (M=93.3, SD=25.04) than for Canadian participants (M=47.2, SD=48.02) [t(44.3)=-4.63, p < 0.001], suggesting that it was harder for Chinese participants to discriminate between two pain intensities. Classification images representing the facial features used by participants were generated separately for Asian and Caucasian faces. Statistical thresholds were found using the cluster test from Stat4CI (Chauvin et al., 2005; Zcrit=3.0; k=667; p < 0.05). Canadians used the eyes, the wrinkles between the eyebrows and the nose wrinkles/upper lip area with both face ethnicities. Chinese participants used the eye area with Asian faces, but no facial area reached significance with Caucasian faces. Compared with Chinese participants, Canadians relied more on the nose wrinkles area (Zcrit=3.0; k=824; p < 0.025). Together, these results suggest that culture impacts the visual decoding of pain facial expressions.

Acknowledgement: Conseil de Recherches en Sciences Humaines

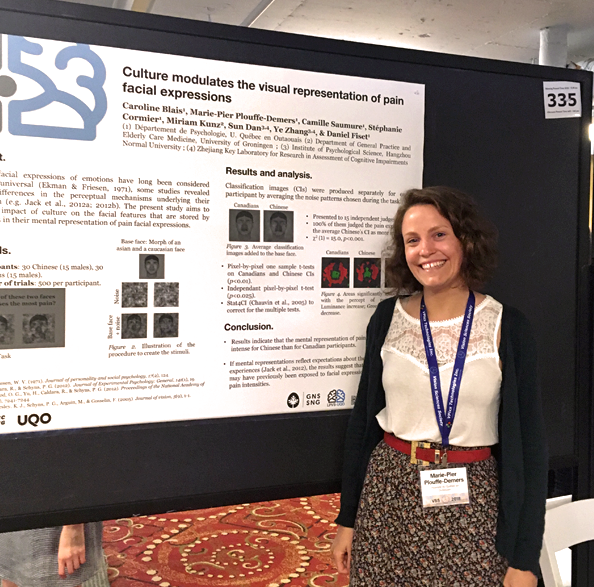

The impact of culture on the visual representation of pain facial expressions

Marie-Pier Plouffe-Demers1, Camille Saumure1, Stéphanie Cormier1, Daniel Fiset1, Miriam Kunz2, Dan Sun3,4, Zhang Ye3,4, Caroline Blais1

1 Department of psychoeducation and psychology, Université du Québec en Outaouais, 2 Department of General Practice and Elderly Care Medicine, University of Groningen, 3 Institute of Psychological Sciences, Hangzhou Normal University, 4 Zhejiang Key Laboratory for Research in Assessment of Cognitive Impairments

VIEW POSTER

Some studies suggest that the communication of pain is connected to the evolution of the human race and has evolved in a way that increases an individual’s chance of survival (Williams, 2002). However, even though facial expressions of emotions have long been considered culturally universal (Izard, 1994; Matsumoto & Willingham, 2009), some studies have revealed cultural differences in the perceptual mechanisms underlying their recognition (e.g. Jack et al., 2009; Jack et al., 2012). The present study aims to verify the impact of culture on the facial features that individuals store in their mental representation of pain facial expressions. To that end, the observer-specific mental representations of 60 participants (i.e. 30 Caucasian, 30 Chinese) were measured using the Reverse Correlation method (Mangini & Biederman, 2004). In 500 trials, participants chose, from two stimuli, the face that looked the most in pain. On each trial, both stimuli consisted of the same base face (i.e. a morph between average Asian and Caucasian avatars showing a low pain level) with random noise superimposed: a random noise pattern was added to one stimulus and subtracted from the other. We generated a classification image (CI) for each group by averaging the noise patterns chosen by participants. The cultural impact on mental representations was measured by subtracting the Caucasian CI from the Chinese CI and applying a Stat4CI cluster test to the result (Chauvin et al., 2005). Results indicate significant differences in the mouth and left eyebrow areas (ZCrit=3.09, K=167, p < 0.025), and suggest a mental representation of pain facial expression of greater intensity for Chinese participants. Given that mental representations reflect expectations about the world based on past experiences (Jack et al., 2012), the results suggest that Chinese participants may have previously been exposed to facial expressions displaying greater pain intensities.
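The reverse correlation procedure summarized in this abstract (averaging the noise patterns that participants selected) can be sketched as follows. This is an illustration on a simulated observer, not the authors' code: the function name `classification_image`, the image size, and the rectangular "template" region are all hypothetical, and the Stat4CI cluster test is not reproduced.

```python
import numpy as np

def classification_image(noise, chose_added):
    """Average the noise patterns of the chosen stimuli.

    noise:       (n_trials, height, width) noise pattern used on each trial
    chose_added: (n_trials,) True if the observer picked base + noise,
                 False if they picked base - noise
    """
    # The chosen stimulus carried +noise or -noise depending on the response
    signs = np.where(chose_added, 1.0, -1.0)
    return (signs[:, None, None] * noise).mean(axis=0)

# Simulated observer (hypothetical): picks the stimulus whose noise best
# matches an internal template located in a small rectangular region
rng = np.random.default_rng(1)
n_trials, height, width = 500, 16, 16
template = np.zeros((height, width))
template[10:13, 5:11] = 1.0
noise = rng.normal(size=(n_trials, height, width))
chose_added = (noise * template).sum(axis=(1, 2)) > 0

ci = classification_image(noise, chose_added)
inside = ci[template.astype(bool)].mean()
outside = ci[~template.astype(bool)].mean()
print(inside > outside)
```

A group difference image, as in the abstract, would then be obtained by subtracting one group's CI from the other's before any statistical test.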

Acknowledgement: Conseil de Recherches en Sciences Humaines

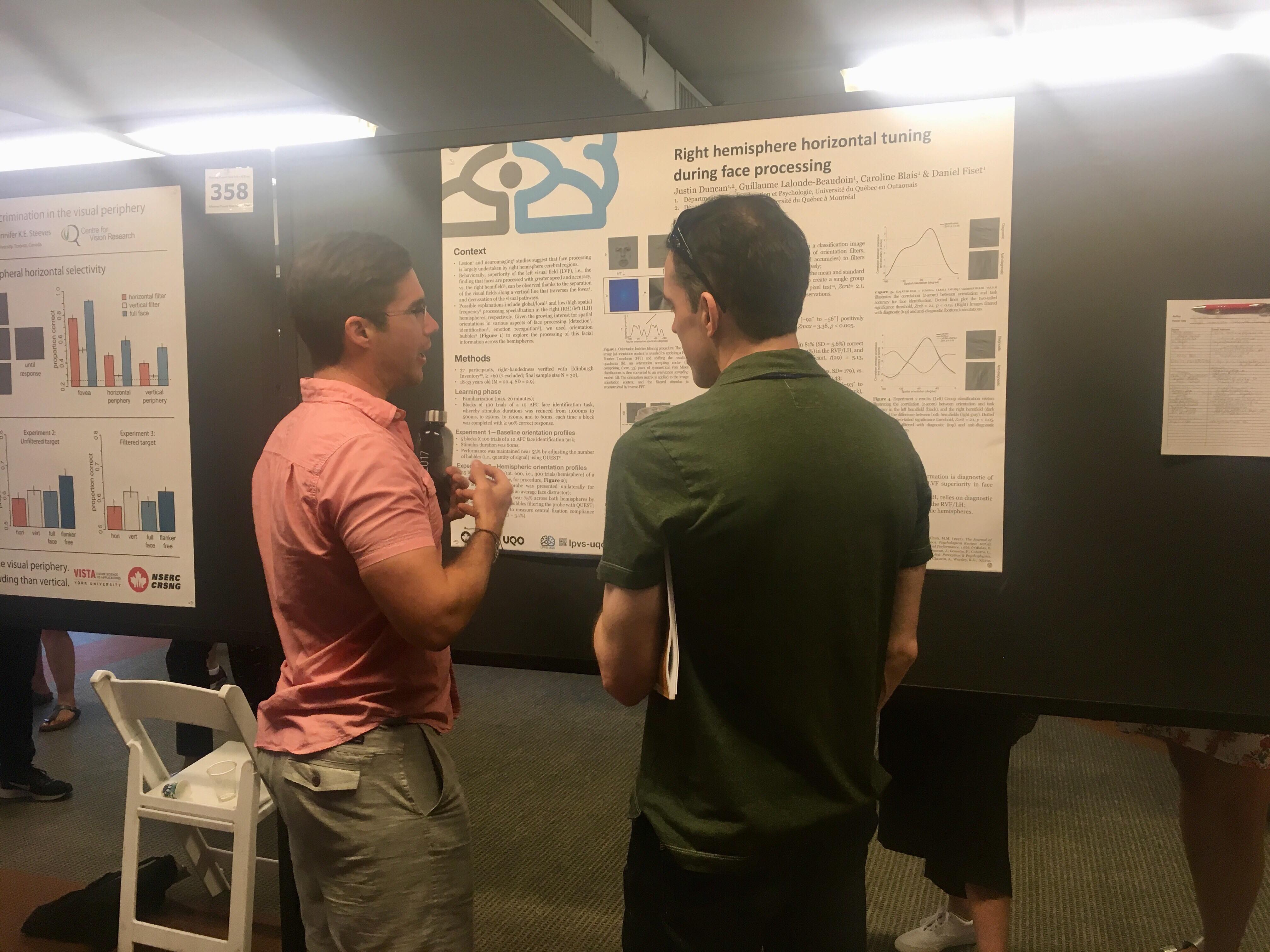
